Why Is My Own Writing Being Detected as AI? 9 Fixes That Actually Help (2026)

Tested Jan–Aug 2025 • Updated January 10, 2026 • 10 min read

Short answer: Human essays can be mislabeled as “AI-written” when the style looks overly uniform, generic, or template-like. Detection tools don’t prove misconduct — they estimate the probability of machine-generated text. The fix isn’t tricks; it’s visible authorship: specific sources, lived context, varied rhythm, and a transparent draft trail.

We keep seeing the same DM: “I wrote this myself and it still got flagged. What am I doing wrong?” Honestly, we didn’t expect so many false positives either, until we ran controlled tests. Clean structure, neutral tone, and predictable transitions can look “AI-ish,” especially when your draft lacks concrete examples or personal context. Below we explain why it happens, how professors treat flags, and practical ways to make your authorship obvious without gaming detectors.

Why “Human = AI?” The Pattern Problem

AI detectors don’t read like a professor; they score probability patterns. If your writing marches in lockstep (same sentence length, same transitions, same generic phrasing), the statistical profile can resemble raw AI output. That’s why a careful student who follows every template sometimes gets a higher “AI likelihood” than a messy, exploratory draft. Writing clearly isn’t a fault; the math simply reads sameness as a machine signal.
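
To make “sameness” concrete, here is a minimal Python sketch that measures how much sentence length varies in a draft. It is our own illustration, not how any particular detector works: the function names (`sentence_lengths`, `rhythm_summary`) and the use of standard deviation divided by mean as a “variation” figure are assumptions made for this example, and the sentence splitter is deliberately naive.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on whitespace that follows ., ! or ? and count words per sentence.

    Naive on purpose: abbreviations such as "e.g." will be split too.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def rhythm_summary(text: str) -> dict[str, float]:
    """Summarize sentence rhythm; 'variation' is stdev / mean (hypothetical metric)."""
    lengths = sentence_lengths(text)
    if not lengths:
        return {"sentences": 0, "mean_words": 0.0, "stdev_words": 0.0, "variation": 0.0}
    mean = statistics.mean(lengths)
    spread = statistics.pstdev(lengths)  # population stdev works for a single sentence too
    return {
        "sentences": len(lengths),
        "mean_words": mean,
        "stdev_words": spread,
        "variation": spread / mean if mean else 0.0,
    }
```

A paragraph in which every sentence has the same word count returns a variation of 0.0; mixing one-word sentences with longer analytical ones pushes the figure up. Treat it as a prompt to reread a monotone passage, not as a score to chase.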

What Our Tests Showed (And Why We Were Surprised)

Across multiple prompts (900–1,100 words, APA 7, similar sources), our fully human essays usually scored near 0–10% AI-likelihood, but not always. A procedural explainer with uniform sentences once scored in the high teens on a popular checker. When we added lived detail from lecture notes, varied the sentence rhythm, and expanded the discussion section, the score dropped on resubmission. The big lesson: authentic context reduces flags far more reliably than paraphrasing tricks.

Before–After: How Style Signals Shift

| Feature | Looks “AI-ish” When… | Looks Authored When… |
|---|---|---|
| Sentence Rhythm | Uniform length, same cadence, repeated scaffolds | Mixed length, occasional asides, emphasis where meaning needs it |
| Transitions | “Firstly/Secondly/Finally” on repeat | Contextual pivots tied to sources or class discussion |
| Evidence | Generic claims with vague citations | Specific page numbers, quotes, figures, lab or lecture artifacts |
| Voice | Detached summary with no viewpoint | Reasoned stance: “we argue…”, “the data suggests…”, “to be fair…” |
| Task Fit | Template text that could fit any course | Course-specific terms, rubric cues, required frameworks |

How Professors Actually Use AI Scores

Most instructors we spoke with treat AI scores as a signal to review, not a verdict. They look at your sources, the argument, and whether your draft history and notes make sense. A flagged paragraph prompts questions like “Where did this idea come from?” If you can show drafts with timestamps, reading notes, and citation manager logs, the conversation usually tilts in your favor. If you can’t show your process, that’s when trouble begins.

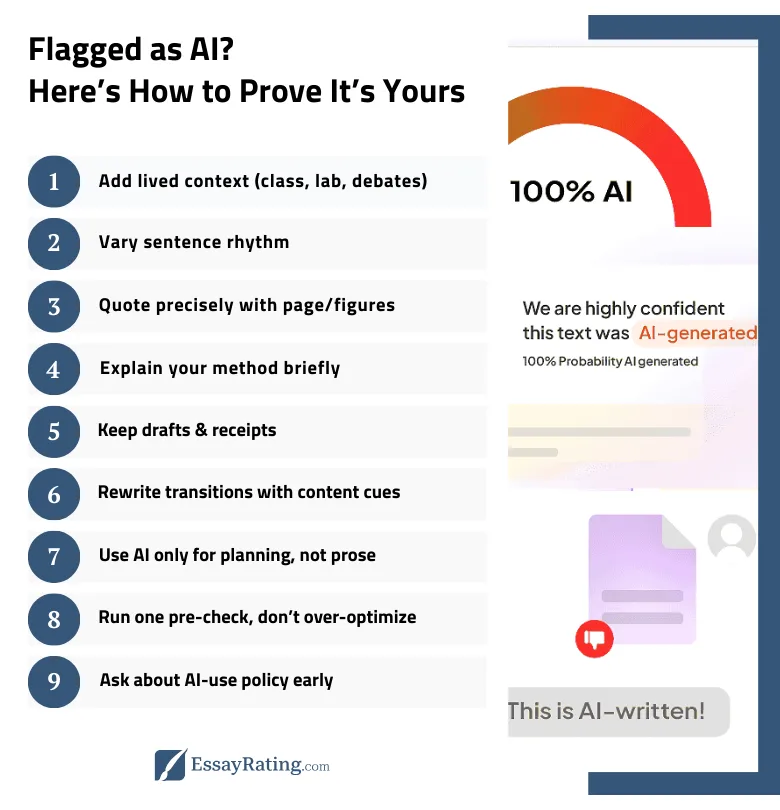

9 Fixes That Actually Help

We tested these on flagged drafts. They don’t promise a zero score; they make your authorship visible and your paper stronger.

- Add lived context. Tie claims to your course: lecture insights, class debates, lab data, field notes. A sentence or two of “what we observed in lab 3” changes the signal more than synonyms ever will.

- Vary sentence rhythm on purpose. Mix short emphasis lines with longer analytical ones. If every sentence is 20–22 words, that sameness can look machine-made.

- Quote precisely, not vaguely. Include page numbers, figures, or dataset IDs. Specific anchors read as human research, not boilerplate.

- Explain your method. Two lines on how you selected sources or analyzed data show intent, and they’re useful for grading.

- Draft in rounds and keep receipts. Save early outlines, mid-drafts, and reference manager exports. If questioned, you can show how the paper evolved.

- Rewrite transitions to match content. Swap “firstly/secondly” for content-driven pivots: “methodologically,” “by contrast in Smith (2023),” “in our lab replication.”

- Use AI for process, not product. Brainstorm questions or outline blind spots, then write the prose yourself. If the course allows, add a brief note that AI was used only for planning.

- Check once, don’t over-optimize. A pre-check can highlight generic patches, but writing for detectors backfires. Write for your professor: clarity, evidence, logic.

- Ask about policy early. Syllabi differ. If limited AI assistance is allowed with citation, include a one-sentence AI-use note; if it’s banned, don’t risk it.
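
For the transitions fix, a quick self-check can show how often a draft leans on stock connectors. The sketch below is a rough illustration only: the word list in `STOCK_TRANSITIONS` is our own assumption (extend it to suit your habits), and a high count is a cue to reread, not evidence of anything.

```python
import re
from collections import Counter

# Illustrative list only; not drawn from any detector.
STOCK_TRANSITIONS = ("firstly", "secondly", "thirdly", "finally")


def count_stock_transitions(text: str) -> Counter:
    """Count case-insensitive occurrences of each stock transition word."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w in STOCK_TRANSITIONS)
```

Calling `count_stock_transitions(draft_text)` returns a `Counter` keyed by transition word. Any word that turns up in paragraph after paragraph is a candidate for a content-driven pivot instead.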

Soft note from the team: Stuck under a six-hour deadline? Sometimes the academically safer path is a human-edited draft with a verified plagiarism report. Compare options in our in-depth reviews or check a live deal on the promo codes page. We place real orders and test refunds so you don’t have to.

False Positives We’ve Seen (Case Notes)

Case A: procedural explainer, flagged. A neat step-by-step essay with uniform sentences and generic transitions hit a double-digit AI score. After we added a short “method” paragraph, a concrete example from class, and a few sentences of varied length, the flag dropped on resubmission.

Case B — Hybrid draft, cleared after revision: we started from a brainstorming outline, then replaced generic sections with course readings, our lab observation, and tighter citations. The checker’s paragraph-level alerts evaporated where we injected authentic context.

Academic Integrity, Plainly

Submitting AI-written text as your own can violate policy even if detectors miss it — and that’s not our play. We use AI for thinking work (questions, outlines, source surfacing), then write, analyze, and cite like humans. If you’re overwhelmed, get legitimate help: tutoring, editing, or a vetted service that provides a plagiarism report and supports revisions. We keep a current list of tested providers plus discounts so you never pay full price.

Proof of Authorship: What to Keep

| Artifact | What It Shows | Pro Tip |
|---|---|---|
| Draft history (docs with timestamps) | Your writing evolved over time | Keep v1, v2, v3; don’t overwrite |
| Outline + notes | You planned the structure yourself | Attach a photo of handwritten notes if allowed |
| Citation manager export (Zotero/Mendeley) | Real sources you actually opened | Include annotations or page notes |
| Data snippets (lab, survey, calc) | Original analysis, not template text | Redact identifiers; keep methods clear |
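
If your editor doesn’t keep version history for you, the “keep v1, v2, v3; don’t overwrite” habit can be automated. Here is a minimal sketch, assuming your draft is a single local file; the function name `snapshot_draft` and the `archive_dir` folder are hypothetical, chosen for this example.

```python
import shutil
from datetime import datetime
from pathlib import Path


def snapshot_draft(draft: Path, archive_dir: Path) -> Path:
    """Copy the draft into archive_dir under a timestamped name, never overwriting."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = archive_dir / f"{draft.stem}_{stamp}{draft.suffix}"
    counter = 1
    # If two snapshots land in the same second, add a numeric suffix.
    while target.exists():
        target = archive_dir / f"{draft.stem}_{stamp}_{counter}{draft.suffix}"
        counter += 1
    shutil.copy2(draft, target)  # copy2 also preserves the file's modification time
    return target
```

Running `snapshot_draft(Path("essay.docx"), Path("drafts"))` at the end of each writing session leaves a dated trail you can show if questioned. Cloud editors with built-in version history serve the same purpose.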

“My Paper Was Flagged — What Do I Say?”

Be calm and specific. Share your drafts and notes, explain your method, and walk through one paragraph to show how you got from source to claim. We’ve been in that conversation — having tangible artifacts changes the tone immediately. If your department allows a resubmission after revision, use the nine fixes above and document the changes you made.

Final Verdict

False positives happen because detectors score patterns, not intent. Make your authorship obvious: bring in course-specific detail, varied rhythm, precise citations, and a documented workflow. If you do that — and stay within policy — AI flags tend to lose their bite. And if you need help, get it the right way: human support, transparent terms, and a plagiarism report you can show with confidence.

