Can You Fail a Course for Using AI? What Really Happens to Students

Summary: Yes, you can fail a course for using AI – but not always, and not automatically. It depends on how you used AI, what your university rules say, and how your professor handles academic integrity cases. Most students don’t fail instantly. What usually happens is a review, a conversation, and sometimes a penalty. This guide explains the real process, not the panic version you see on TikTok or Reddit.

Updated: January 10, 2026 · 12 min read

Short answer: Yes, using AI can lead to failing a course if it’s considered unauthorized help or misrepresentation of authorship. But in most cases, students don’t fail right away. Professors investigate first, ask questions, and apply penalties based on intent, course policy, and prior history.

A lot of students use AI. Not always to cheat. Sometimes to understand a topic. Sometimes to structure a paper. Sometimes because deadlines pile up and something has to give.

The problem is that universities are still figuring out how to deal with AI. Rules change fast. Enforcement is uneven. And students are left guessing where the line actually is.

This article explains what really happens when AI use is suspected. Not the worst-case horror stories. The real process.

Key Takeaways

- Most students don’t fail a course just for using AI once.

- Penalties depend on how AI was used, not just that it was used.

- Submitting AI-generated text as your own is the biggest risk.

- Professors look for writing inconsistencies, not “AI fingerprints.”

- There are safer ways to get help without risking academic penalties.

Can You Actually Fail a Course for Using AI?

Yes. It happens. But it’s not the default outcome.

Failing a course is usually reserved for serious or repeated violations. Think full AI-generated papers submitted as original work. Or ignoring a clear “no AI” policy. Or lying when asked about your process.

In most cases, professors don’t jump straight to a fail. They start with questions. They want to know how the work was produced. Whether you understand it. Whether the AI replaced your thinking or just supported it.

If your answers make sense and your intent looks reasonable, the outcome is often much lighter.

What Usually Happens First

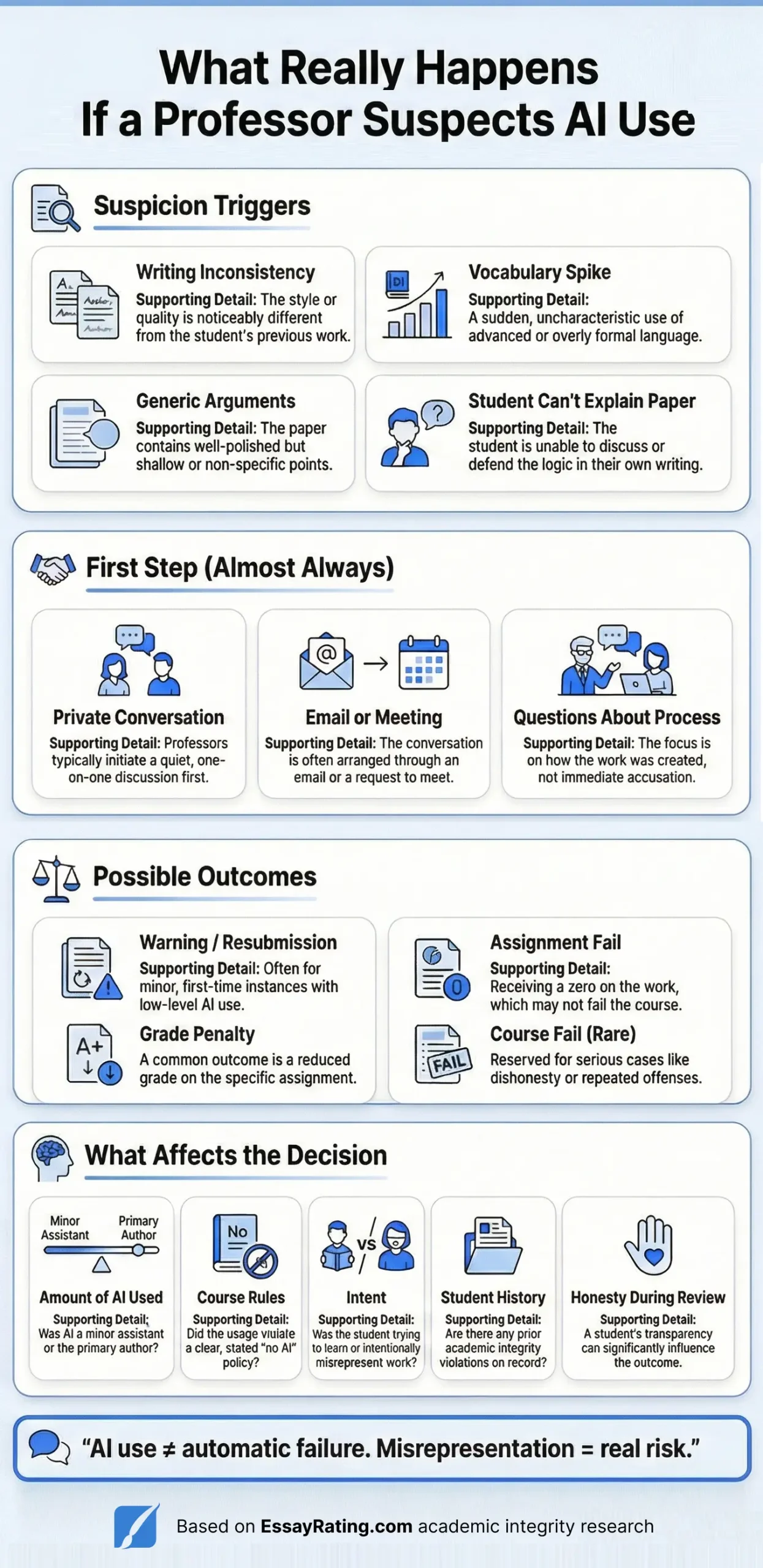

When AI use is suspected, the process is usually quiet at first. A professor notices something off. The writing feels different. More polished. Or oddly generic. Or just not like your previous work.

Instead of accusing you, they may invite you to talk. Sometimes by email. Sometimes after class. Sometimes through an academic integrity office.

This stage matters a lot. How you respond can shape everything that comes next.

If you panic, deny everything, or can’t explain your own paper, things escalate fast.

Common Outcomes (From Mild to Severe)

Here’s the typical range of outcomes, from mildest to most severe, as most university academic integrity policies lay them out.

Warning or resubmission. This is common for first-time or minor cases. Especially if the AI use was limited to structure or wording.

Grade penalty. You might receive a reduced grade or a zero on the assignment, or be required to redo the work.

Failing the assignment. This hurts, but it doesn’t always mean failing the course.

Failing the course. Usually reserved for clear misconduct, repeated violations, or dishonesty during the review.

Disciplinary record. In serious cases, the incident may be recorded in your institutional disciplinary file. This is rare but possible.

Why Some Students Fail and Others Don’t

Two students can use AI in similar ways and get very different outcomes.

Why? Because context matters.

Professors look at:

- Your past writing style

- How much AI was used

- Whether AI replaced thinking or supported it

- Course-specific rules

- Your response when questioned

- Your academic history

Intent matters more than tools.

This is why blanket advice like “AI = instant fail” is misleading.

What Professors Actually Detect

Most professors aren’t hunting for AI. They’re looking for inconsistency.

Sudden jumps in vocabulary. Perfect grammar when earlier work was full of errors. Arguments that sound polished but shallow. They also notice when students can’t explain their own logic.

This aligns with what we explained in our guide on how professors detect outsourced writing.

Is Using AI Always Academic Misconduct?

No. And this part gets misunderstood a lot.

Many universities allow AI for brainstorming, outlining, grammar checks, or idea clarification. Problems start when AI replaces authorship.

If the final text is mostly generated by AI and submitted as your own work, that’s where violations happen.

False AI Detection Is Real

AI detectors are not proof machines.

They guess. Sometimes badly. Formal writing, non-native English, or highly structured essays can trigger false positives.

This is why many schools don’t rely on detection scores alone. They also look at writing history and student explanations.

How Students Get in More Trouble Than Necessary

Most serious penalties happen after mistakes during the investigation.

Common ones:

- Denying obvious AI use

- Claiming “the detector is wrong” without explanation

- Not understanding your own paper

- Admitting full AI generation casually

- Ignoring emails or meetings

Silence and dishonesty make things worse.

Safer Ways to Get Academic Help

If you’re overwhelmed, there are better options than full AI writing. Editing your own draft. Getting feedback. Improving structure. Clarifying arguments.

These forms of help keep you as the author. They also align better with academic integrity rules and reduce risk.

Bottom Line

Yes, you can fail a course for using AI. But most students don’t. Failure usually comes from misuse, not curiosity.

If you understand the rules, keep authorship, and use tools responsibly, the risk drops a lot. The real danger isn’t AI. It’s pretending AI did your thinking.

Frequently Asked Questions

Yes, but it depends on how AI was used. Most students don’t fail automatically. Penalties usually follow an investigation and depend on intent, course rules, and prior violations.

No. Many universities allow AI for brainstorming, outlining, or grammar help. It becomes cheating when AI replaces your own thinking or writing.

Usually, the professor asks questions or starts a review process. They look at writing consistency, your understanding of the topic, and how the work was created.

No. AI detection tools show probabilities, not proof. False positives happen, so most schools don’t rely on detection scores alone.

Only in serious or repeated cases. Minor or first-time issues often result in warnings or grade penalties without permanent records.

Expulsion is rare. It usually happens only after repeated violations or clear academic misconduct combined with dishonesty.

Both carry risks if they replace your authorship. Editing, feedback, and guidance are generally safer than submitting AI-generated or ghostwritten work.

Follow course rules, keep your own voice, understand your paper, and use AI only as a support tool, not a replacement.
