Can Turnitin Detect AI Essays in 2026? Honest Tests, Real Risks, and Safer Ways to Use AI

Tested Jan–Aug 2025 • Updated January 10, 2026

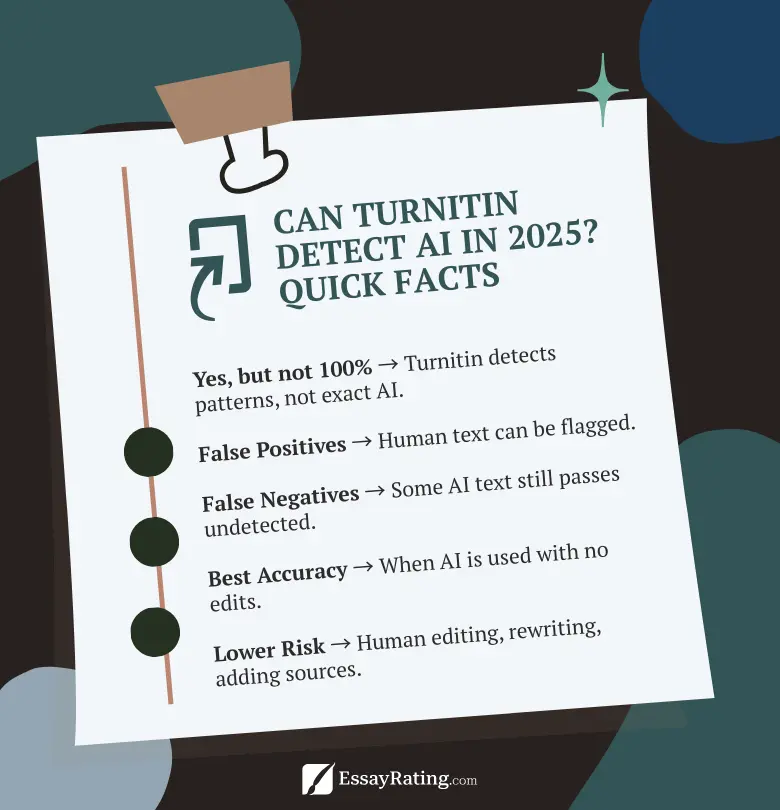

Quick answer (2026): Yes, Turnitin can detect AI-written text, especially raw ChatGPT-style prose. But detection isn’t perfect: in our tests we saw false positives on clean human essays and lower scores on heavily edited drafts. Instructors use the report as a signal, not a verdict; your sources, drafts, and analysis still matter most.

Students ask us about Turnitin every week. Some want to use AI for brainstorming without crossing a line; others are worried about false positives. We get it: we’ve been in those late-night “is this safe?” moments too. Our team ran controlled tests, checked a sample of real orders from our essay service reviews, and spoke with instructors to understand what actually triggers flags and what professors do with AI scores.

How Turnitin’s AI Detection Works

Turnitin doesn’t “catch ChatGPT” the way a speed camera catches cars. It evaluates segments of your text and assigns each a probability of being machine-generated. It looks for statistical patterns (uniform sentence structure, narrow vocabulary choices, templated transitions), and then produces an overall “AI likelihood” number. That number is not a legal verdict. It’s an indicator for instructors to review alongside the argument quality, sources, and your course policy.
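To make the segment-then-aggregate idea concrete, here is a toy sketch in Python. It is not Turnitin’s model, which is proprietary. The per-segment probabilities are made-up inputs, and the `Segment` class, the `overall_ai_share` function, and the 0.5 threshold are our own assumptions for illustration. It shows only how segment-level probabilities might roll up into one percentage, weighted by segment length.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    text: str
    ai_probability: float  # hypothetical classifier output in [0, 1]


def overall_ai_share(segments, threshold=0.5):
    """Percentage of words that sit in segments scored at or above threshold.

    Assumption: a segment counts as "flagged" when its probability reaches
    the threshold. Real detectors may aggregate very differently.
    """
    total_words = sum(len(s.text.split()) for s in segments)
    if total_words == 0:
        return 0.0
    flagged_words = sum(
        len(s.text.split()) for s in segments if s.ai_probability >= threshold
    )
    return 100.0 * flagged_words / total_words


# Made-up example: one generic segment, one with lived context.
segments = [
    Segment("In conclusion, technology has changed education in many ways.", 0.91),
    Segment("In our Tuesday lab the sensor drifted, so we reran the trial twice.", 0.12),
]

print(f"{overall_ai_share(segments):.1f}%")  # prints 40.9%
```

The design choice worth noticing is the length weighting: a short flagged paragraph moves the overall number less than a long one, which is one plausible reason scores vary by section.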

Important detail we noticed: when a paper includes personal context (class notes, lab data, seminar examples) and fresh interpretation, the AI signal drops. When writing is clean but generic, the signal rises. Ironically, over-polished, template-like prose can look “more AI” than a slightly messy but engaged draft.

How We Tested (Jan–Aug 2025)

To keep this guide practical, we ran three standardized scenarios on the same prompt. All drafts were 900–1,100 words, APA 7, with peer-reviewed sources. We logged drafts and timestamps, then submitted each draft to four detectors: Turnitin (LMS integration), Originality.ai, Copyleaks, and GPTZero. We also checked the findings against a sample of real orders from our reviews.

| Condition | Workflow | Length & Style | Result (Turnitin AI score) |
|---|---|---|---|
| Pure AI | ChatGPT draft → light grammar polish | 900–1,100 words, APA 7, 6 sources | 84–98% (most paragraphs flagged) |
| Hybrid | AI outline → our research & heavy rewriting → real citations | 900–1,100 words, APA 7, 8 sources | 18–47% (varied by section; personal analysis reduced flags) |
| Human | Written from scratch by our editor, no AI tools | 900–1,100 words, APA 7, 8 sources | 0–21% (one false positive in the high teens) |

Limitations

Detection models keep changing, and assignments differ by discipline. Treat our numbers as directional, not universal. We’ll refresh this page every 6 months as part of our site-wide update cycle.

What Actually Triggers False Positives

Most of our false positives shared three things: minimal personal context, consistent sentence rhythm, and predictable transitions (“firstly… secondly…”). When we added lived details from class or lab, varied sentence length, and inserted commentary (“we noticed…”, “to be fair…”), the AI signal dropped. The takeaway is simple: polished does not have to mean robotic. Write like you — then cite like a pro.
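If you want a rough self-check for two of those traits, the sketch below measures sentence-length spread and counts stock transitions in a draft. It is a writing aid under our own assumptions, not a reproduction of any detector: the `TRANSITIONS` list (beyond “firstly” and “secondly”) and the `rhythm_report` function are hypothetical, and the sentence splitter is deliberately naive.

```python
import re
import statistics

# Assumed list of stock transitions; extend it for your own writing habits.
TRANSITIONS = ("firstly", "secondly", "thirdly", "moreover", "furthermore", "in conclusion")


def split_sentences(text):
    """Naive split on ., !, or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def rhythm_report(text):
    """Return sentence count, spread of sentence lengths (in words), and transition hits."""
    sentences = split_sentences(text)
    lengths = [len(s.split()) for s in sentences]
    # Population standard deviation: a low value means very uniform sentence length.
    spread = statistics.pstdev(lengths) if lengths else 0.0
    lowered = text.lower()
    hits = sum(
        len(re.findall(r"\b" + re.escape(t) + r"\b", lowered)) for t in TRANSITIONS
    )
    return {"sentences": len(sentences), "length_spread": spread, "transition_hits": hits}


sample = "Firstly, we ran the test. Secondly, we ran it again. It failed."
print(rhythm_report(sample))
```

A low `length_spread` together with several `transition_hits` suggests the draft may read as template-like. Treat it as a prompt to revise, not as a prediction of any score.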

How Professors Use AI Scores

Across the policies we sampled in US/UK programs, the workflow usually looks like this: the instructor reviews the report, skims your sources, and asks follow-up questions if something feels off. Many departments now require manual review before any escalation. That’s why your draft history, notes, and citation trail matter. If you can show process, you’re already in a stronger position than a student who can’t.

Policy Snapshot (2026)

| Aspect | Summary |
|---|---|
| What the report is | A probability indicator, not proof of misconduct. |
| Common process | Instructor review → questions to the student → decision per course policy. |
| What helps you | Drafts with timestamps, outline history, reading notes, citation manager records. |
| Typical outcome | Honest workflows + visible sources usually pass; undisclosed ghostwriting doesn’t. |

Key Takeaways (2026)

- Detection works “often,” not “always.” Raw AI prose gets flagged a lot; edited, mixed, or personal writing is harder to classify.

- False positives exist. Clear structure and generic tone can raise “AI-like” signals even in human work.

- Policy beats numbers. Instructors decide. Bring drafts, sources, and a clean workflow.

Safer Ways to Use AI (Without Tanking Your Grade)

- Use AI for process, not product. Outlines, idea prompts, concept checks. Write your final prose yourself.

- Add lived context. Course readings, class notes, lab data, interviews, and your interpretation.

- Document everything. Save drafts, Zotero/Mendeley logs, and revision history; if asked, you can show your process.
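One low-effort way to keep a draft trail is to snapshot your file each time you finish a writing session. The sketch below is a minimal example under our own assumptions: the `snapshot_draft` helper, the `draft_history` folder name, and the file-naming scheme are hypothetical, and a word processor’s built-in version history does the same job if you prefer it.

```python
import hashlib
import shutil
from datetime import datetime, timezone
from pathlib import Path


def snapshot_draft(draft_path, archive_dir="draft_history"):
    """Copy the draft into archive_dir under a UTC-timestamped name.

    The name also carries a short SHA-256 prefix of the file contents,
    so two snapshots with identical text are easy to spot.
    Returns the path of the new copy.
    """
    draft = Path(draft_path)
    archive = Path(archive_dir)
    archive.mkdir(parents=True, exist_ok=True)

    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    digest = hashlib.sha256(draft.read_bytes()).hexdigest()[:12]
    target = archive / f"{draft.stem}_{stamp}_{digest}{draft.suffix}"

    shutil.copy2(draft, target)  # copy2 also preserves the file's modification time
    return target


# Example call (assumes a file named essay.docx exists next to the script):
# snapshot_draft("essay.docx")
```

Run it after each session and you end up with a dated folder of versions you can show if an instructor asks how the essay developed.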

Which Checker – and When?

| Scenario | Best Fit | Why |
|---|---|---|
| Instructor needs an LMS report | Turnitin | Integrated, paragraph-level signals, admin visibility. |
| Self-check before submission | Originality.ai or Copyleaks | Granular scores; useful for iterative drafts and revisions. |
| Fast “is this risky?” triage | GPTZero | Simple readout to decide if deeper edits are needed. |

Rule of thumb: optimize for your professor, not for the checker — specific sources, personal analysis, and varied rhythm always win.

How to Cite AI in 2026 (APA & MLA)

If your instructor allows AI assistance, cite it transparently. In APA 7 you can cite the model as software; in MLA 9, as a web resource. Always follow your syllabus first.

APA 7 (Software/Model)

Reference list:

OpenAI. (2025). ChatGPT (GPT-5) [Large language model]. https://chat.openai.com/

In-text: (OpenAI, 2025)

MLA 9

Works Cited:

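“[Short description of your prompt]” prompt. ChatGPT, GPT-5 version, OpenAI, [date you generated the text], https://chat.openai.com/.

In-text: (“[Short description of your prompt]”)

MLA’s guidance is not to list the AI tool as an author, so the entry opens with a description of the prompt instead.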

Do I need to cite my prompts?

If AI materially shaped your outline or wording, include a short methods note: “We used an AI assistant to brainstorm; analysis and final writing are our own.” Some courses require an explicit AI-use statement – check your policy.

Case A: Human Essay, AI Flag (18%)

Our editor wrote a procedural explainer with uniform sentence length and neutral tone. Turnitin surfaced an 18% AI likelihood. After we added a personal example, varied rhythm, and expanded the discussion section, the flag dropped on resubmission. Honestly, we didn’t expect that first number on a fully human draft.

Case B: Hybrid Draft, Lower Than Expected (31%)

We started from an AI outline, replaced sections with course readings and our examples, rewrote transitions, and tightened citations. The final score landed at 31%. The instructor focused on our argument and sources — not the number.

Readers Recommend

If originality checks and deadlines stress you out, students in our inbox usually point to these pages: our continually updated Essay Writing Services Reviews (real orders, refund tests, delivery times), seasonal Essay Promo Codes, and Formatting & Styles quick guides.

Final Verdict

Turnitin can detect AI essays in 2026, but the score isn’t a verdict. Write with real sources, your own analysis, and visible process notes. If you do use AI, keep it to planning and idea generation, cite where required, and finish the writing yourself. That approach survives detectors and, more importantly, earns credit for the work you actually did.

Disclosure: Some links may be affiliate. We may earn a commission – at no extra cost to you. We only recommend services we’ve tested.
