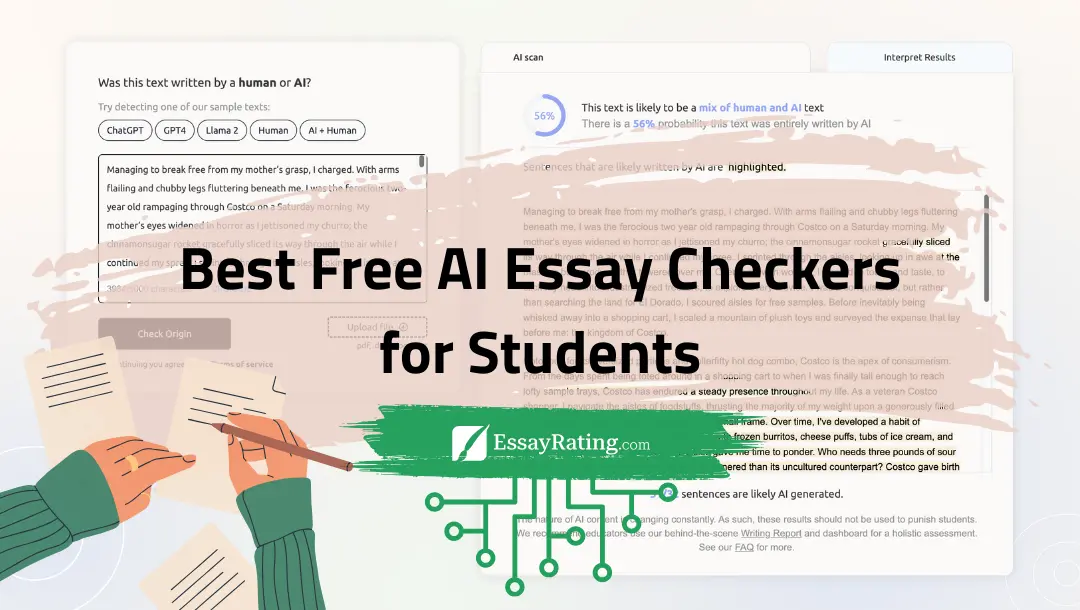

Best Free AI Essay Checkers for Students (2026) • What They Catch & What They Miss

Last updated: January 12, 2026 • 10 min read

Short answer: Free AI essay checkers are useful for a quick pre-check, but none are 100% accurate. They can highlight “AI-like” patches, yet they also trigger false positives on clean human writing. Treat them like smoke alarms, not judges, and always revise for your professor, not for the tool.

We keep testing AI detectors because students ask the same thing every week: “Which free checker actually works?” Honestly, some do help – especially for triage – but over-optimizing to please a detector often makes your writing more robotic. Below, we compare popular free options, show how to use them without tanking your grade, and share a quick fix workflow for flagged paragraphs.

How Free AI Checkers Work (and How They Differ from Turnitin)

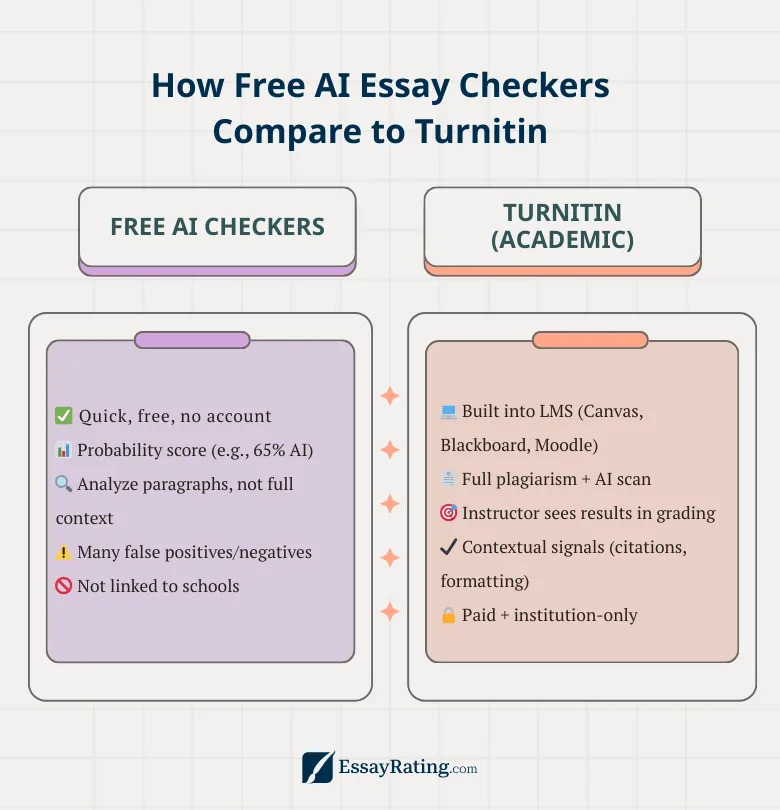

Most free tools estimate the probability that segments of your text were machine-generated by looking at patterns: repetitive rhythm, template transitions, low lexical variety, or suspiciously “even” sentence length. Turnitin, by contrast, is integrated into many LMSs and produces an instructor-facing report that may include paragraph-level signals alongside similarity checks. The key difference isn’t just “accuracy” — it’s context: instructors use Turnitin as one input among many (rubric, sources, your draft trail). Free tools can’t see that context, so take their scores as hints, not verdicts.
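To make those surface patterns concrete, here is a rough Python sketch that measures three of them on a paragraph: how much sentence length varies, how varied the vocabulary is, and how many template transitions appear. It is an illustration only. The function name `uniformity_signals` and the `TEMPLATE_TRANSITIONS` word list are our own assumptions, and no detector named in this article is known to work this way; real tools rely on trained models, not a handful of counts.

```python
import re
import statistics

# Hypothetical word list for illustration; not taken from any real detector.
TEMPLATE_TRANSITIONS = {
    "firstly", "secondly", "thirdly", "finally", "moreover", "furthermore",
}


def uniformity_signals(text: str) -> dict:
    """Return rough, illustrative measures of how 'even' a passage reads."""
    # Crude sentence split on ., ! and ?; abbreviations like "p. 47" will
    # be split too, which is acceptable for a sketch.
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    return {
        "sentences": len(sentences),
        # A low standard deviation means suspiciously "even" sentence length.
        "sentence_length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Unique words divided by total words; lower means more repetition.
        "lexical_variety": len(set(words)) / len(words) if words else 0.0,
        # Count of stock transitions such as "firstly" or "finally".
        "template_transitions": sum(w in TEMPLATE_TRANSITIONS for w in words),
    }


sample = (
    "Firstly, research shows social media affects outcomes. "
    "Secondly, results indicate significant changes. "
    "Finally, it is important to note the implications for future work."
)
print(uniformity_signals(sample))
```

Numbers like these are hints at best. A short paragraph can score as "uniform" purely by chance, which is one reason false positives happen on clean human writing.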

Mini Comparison (Free/Try-First Tools)

| Tool | What It’s Good For | Watch Outs | Best Use |
|---|---|---|---|
| GPTZero | Fast triage; simple readout | Limited context; can be conservative | Quick “is this risky?” pass on a paragraph |
| Copyleaks (free tier) | Balanced signals; paragraph feedback | Free limits; occasional inconsistency | Draft check before you revise |
| Sapling Detector | Easy paragraph checks | Less granular rationale | Spot-check transitions and intros |
| Writer AI Detector | Clear UI; rapid feedback | May overflag formulaic prose | Scan for “too generic” patches |
| GLTR / researchy tools | Educational view of token patterns | Not a classroom standard | Learn why uniform text looks “AI-ish” |

How to Read Each Tool’s Feedback

GPTZero. Best as a quick triage for one paragraph. If it lights up your intro, don’t panic: rewrite that section with a specific claim, a course source, and a sharper pivot to your methods.

Copyleaks (free tier). Useful before a full revision. If it highlights generic transitions, replace them with content-based pivots (“in our lab replication…”, “by contrast in Patel, 2023…”). Re-run only after meaningful edits, not synonyms.

Sapling / Writer. Good for spotting “flat” prose. Use their feedback as a hint to add evidence or vary rhythm — not to inflate adjectives.

GLTR / research tools. Educational view of why uniform text looks AI-like. Great for learning; not a classroom standard.

How to Use Free Checkers Without Hurting Your Writing

- Check once, revise for humans. Run a short scan to find generic patches, then rewrite for clarity, evidence, and course context — not to game the score.

- Work at paragraph level. Tools are most helpful when they nudge you to fix a specific intro, transition, or summary — not your entire paper.

Soft note: under a 6-hour deadline, the safer route can be human editing with a verified plagiarism report. Compare options in our in-depth reviews or grab a deal from promo codes. We place real orders and test refunds.

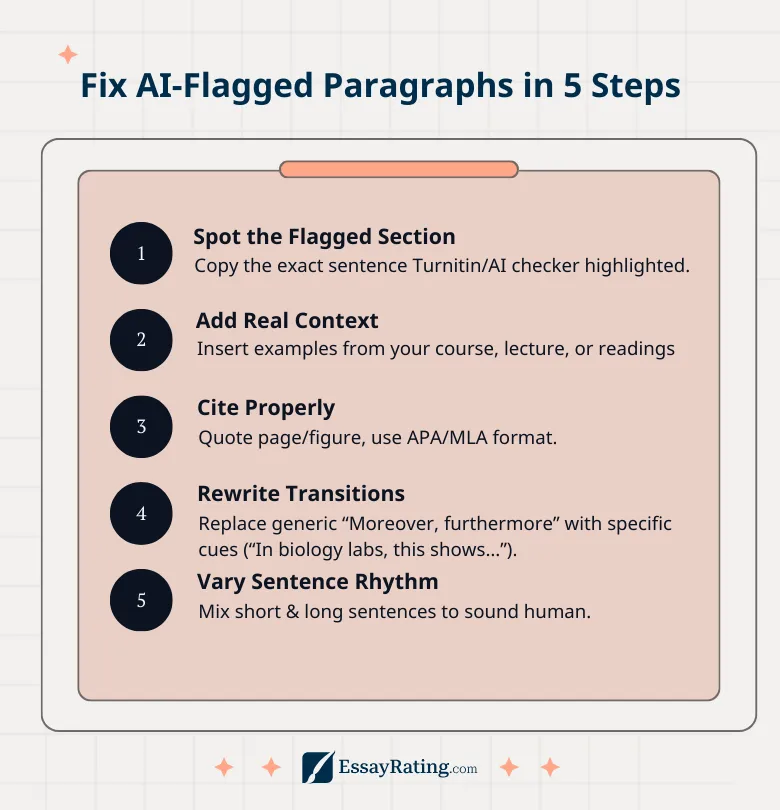

Before → After: What We Fixed on a Flagged Paragraph

Before (flagged): uniform sentence length, generic transitions (“firstly/secondly”), and vague citations (“research shows…”) made the detector uneasy.

After (cleared): we added one concrete classroom example, cited a page number, swapped template transitions for content-based pivots (“methodologically… by contrast in Smith, 2023”), and varied sentence rhythm. The “AI-like” signal dropped without oversimplifying the writing.

Which Checker, When?

| Scenario | Pick | Why |
|---|---|---|
| Quick check on 1–2 paragraphs | GPTZero | Simple triage; flags generic patches |
| Full draft pre-check before revising | Copyleaks (free tier) | Balanced feedback; paragraph targeting |
| Learning the “why” behind flags | GLTR / research tools | Shows token-level patterns and uniformity |

Detectors Are Not Judges – Professors Are

Detectors output probabilities, not proof. Instructors review your argument, sources, and draft history. If you ever need to appeal a false flag, bring receipts: outline and notes, drafts with timestamps, citation manager exports (Zotero/Mendeley), and a short methods note explaining how you wrote the paper. That “authorship story” matters more than a single score.

Methods & Limits (How We Evaluated Free Checkers)

We tested detectors on short academic prompts (argument, literature review intro, lab-report discussion) at 900–1,100 words, APA 7, with peer-reviewed sources. Each draft had a clear thesis and citation trail. We produced three variants per prompt: a “generic” version with uniform rhythm, a revised draft with course-specific examples, and a hybrid that mixed paraphrase repairs with fresh analysis. Tools were run on full drafts and on isolated paragraphs to see where signals concentrate.

Limits: detectors change over time, policies differ by course, and genre matters. A lab discussion with data and figure references behaves differently from a broad humanities overview. Treat every score as directional, not definitive. That’s why we recommend editing for the professor’s rubric first, and using checkers only as a flashlight on generic patches.

Before → After (Short Excerpts That Show the Difference)

Before (flagged): “Firstly, research shows social media affects outcomes. Secondly, results indicate significant changes. Finally, it is important to note the implications for future work.”

After (cleared): “Methodologically, our course dataset (n=214) mirrors Patel (2023, p. 47): engagement spikes before deadlines, then collapses. By contrast, Smith (2024) ties the spike to grading policy rather than platform design — a distinction our lab noted in week 6.”

What changed: content-driven transitions, a concrete dataset, page-level citation, and sentence-length variety. The signal dropped without chasing a tool’s score.

Appeal Template (If a Human Draft Was Flagged)

Subject: Request for Manual Review of AI Flag (Course XYZ)

Hi Professor [Name],

I’m writing to request a manual review of the AI flag on my essay. I drafted this paper myself and have attached evidence of authorship: outline (v1), drafts with timestamps (v2, v3), Zotero export with annotated sources, and notes from lectures (weeks 4–6). I’ve also revised the flagged paragraph to include course-specific examples and precise citations. Happy to walk through how I moved from sources to claims. Thank you for considering this request.

Best,

[Your Name]

Quick Glossary

| Term | What It Means |
|---|---|
| AI-likelihood | Probability estimate a segment was machine-generated |
| Paragraph-level flag | Detector highlights a specific passage, not the whole paper |
| Triage check | Fast scan to find generic or risky patches |
| Content-based pivot | Transition tied to evidence (dataset, page, lab note), not a template |

